I built NerdQA out of frustration with opaque AI research tools. I wanted an engine where every claim is traceable, every source is cited, and the reasoning path is transparent. This open-source system delivers that, using hierarchical citations – developed and released 6 months prior to similar major industry announcements.

🔍 Open Source Research Tool

NerdQA is ready to change your research process with transparent, verifiable citations. Full setup guide and source code are on GitHub.

Overview

NerdQA is a deep research automation system that I implemented 6 months before OpenAI announced its Deep Research agent. It enables verifiable web research through hierarchical citation graphs. As a deterministic, transparent alternative to black-box research agents, it builds traceable, attributable reasoning chains in which every step cites its original sources directly.

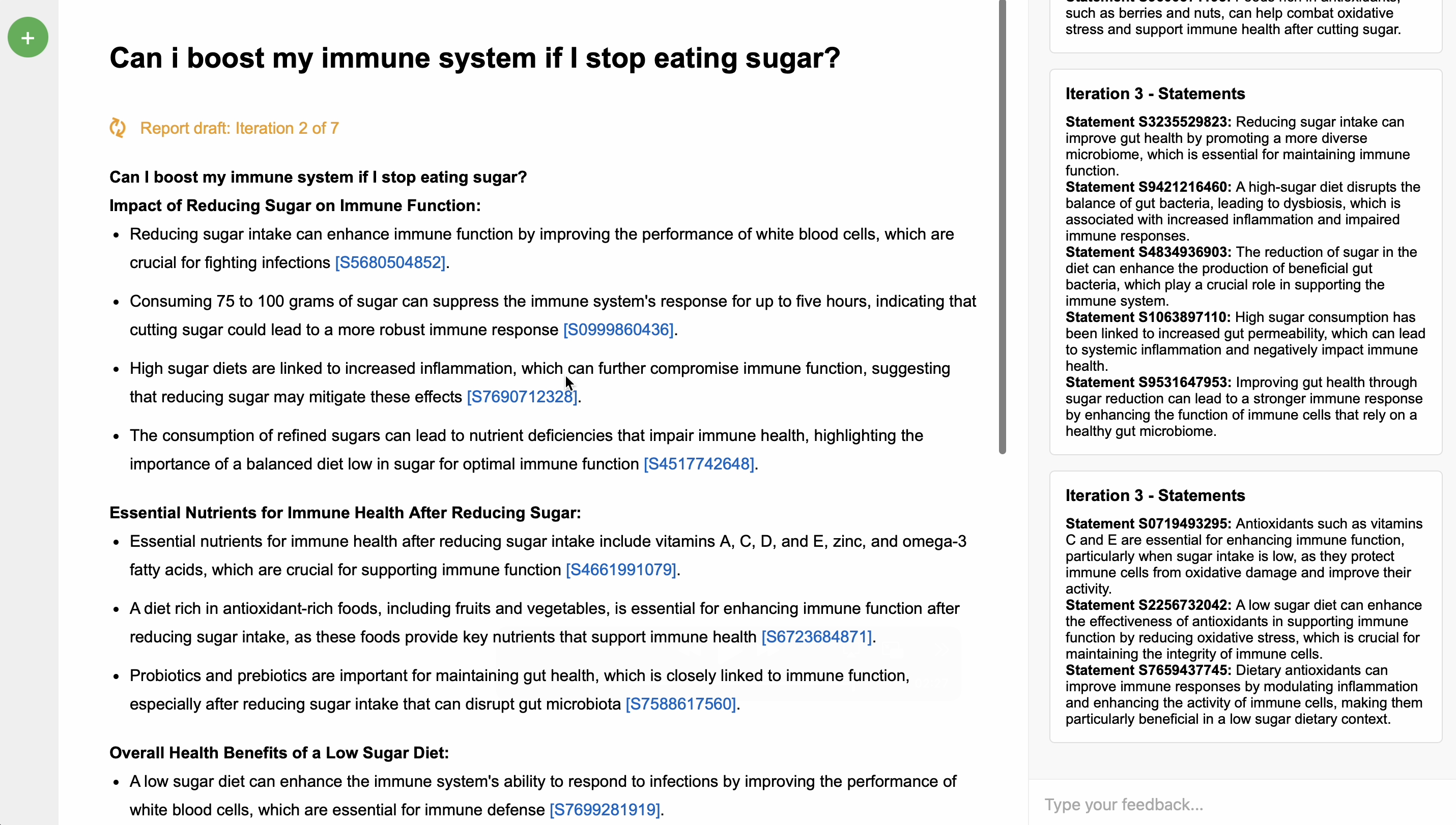

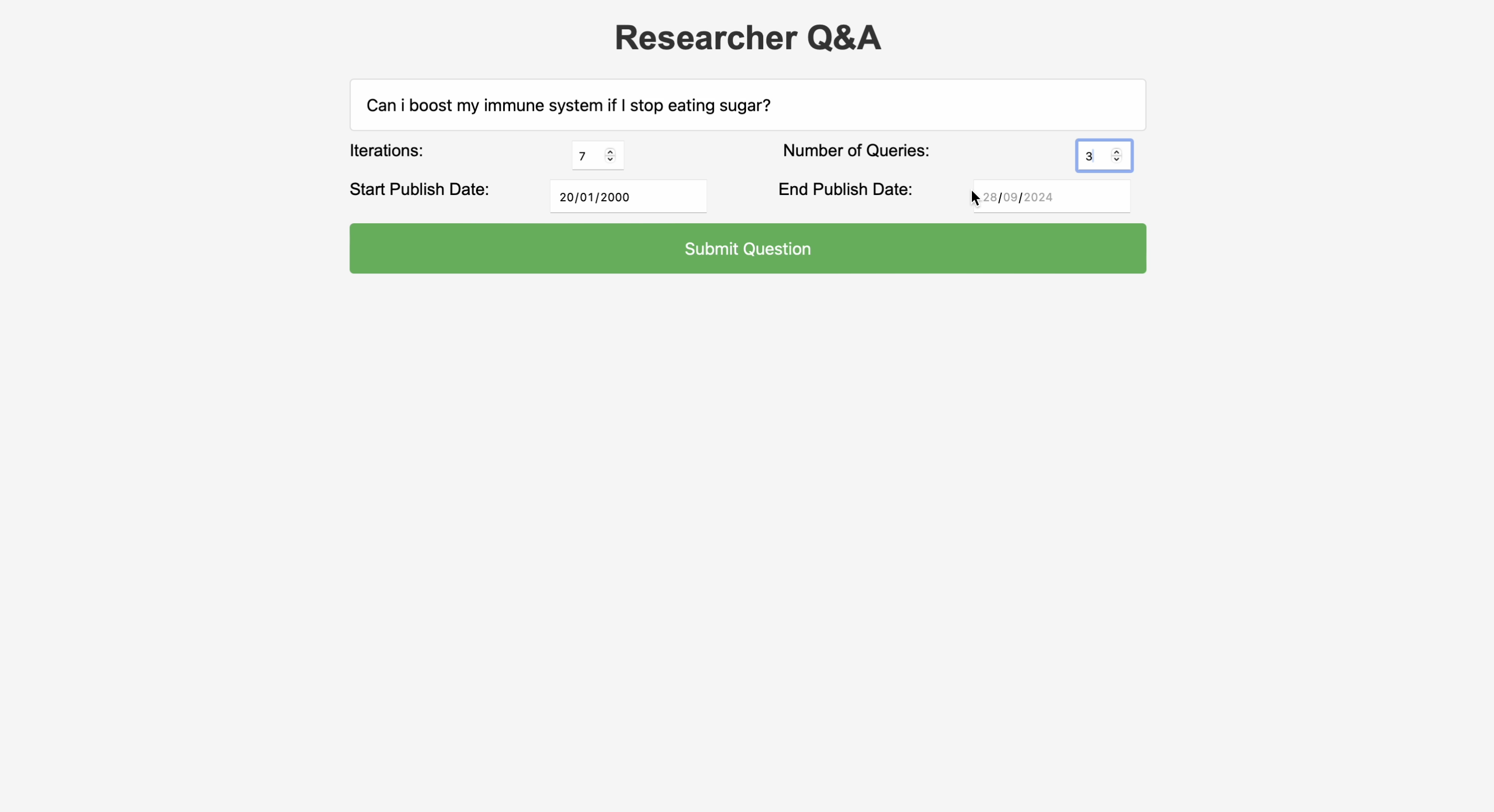

NerdQA’s intuitive interface for submitting research queries with customizable parameters

Key Features

- Transparent Reasoning: Every conclusion is backed by a fully verifiable citation tree

- Source Attribution: All information is directly linked to primary web sources

- Hierarchical Citations: Complex questions are broken down into sub-questions with their own evidence trees

- Deterministic Research: Reproducible research paths for consistent, verifiable results

- Alternative to Black-Box Agents: Brings transparency to AI-assisted research (developed 6 months before OpenAI’s Deep Research)

- Cost-Effective: Designed to run efficiently, potentially on smaller/cheaper LLMs

Why Not Agent-Based?

Unlike common agent-based approaches, NerdQA uses an engineered LLM workflow in a loop that can explore research questions both vertically (for a predefined number of steps) and horizontally (making a defined number of parallel queries). This approach offers several advantages:

- Deterministic Behavior: More predictable outcomes with less randomness

- Easier Troubleshooting: Clear workflow steps make debugging simpler

- Cost Efficiency: Optimized prompts and controlled token usage keep costs low

- Simplified Development: Simple enough to build as a zero-budget pet project in my personal time

- Model Flexibility: Feasible to run on cheaper, smaller LLMs
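
To make the vertical and horizontal limits concrete, here is a minimal sketch of how a fixed budget bounds the work before a run starts. The names `ResearchBudget`, `max_depth`, `max_breadth`, and `plan_queries` are hypothetical and chosen for illustration; they are not taken from the NerdQA codebase.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResearchBudget:
    """Hypothetical knobs: how deep and how wide the workflow may go."""
    max_depth: int    # vertical: number of loop iterations
    max_breadth: int  # horizontal: queries issued per iteration

    def total_queries(self) -> int:
        # The upper bound is known up front, unlike an agent that
        # decides for itself when to stop.
        return self.max_depth * self.max_breadth


def plan_queries(question: str, budget: ResearchBudget) -> list[tuple[int, int, str]]:
    """Enumerate (depth, slot, label) for every query slot in the budget."""
    plan = []
    for depth in range(budget.max_depth):
        for slot in range(budget.max_breadth):
            plan.append((depth, slot, f"{question} [d{depth} q{slot}]"))
    return plan


budget = ResearchBudget(max_depth=3, max_breadth=4)
plan = plan_queries("battery recycling", budget)
```

Because the loop shape is fixed, the number of search calls (and roughly the token spend) can be estimated from these two numbers alone.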

What Makes NerdQA Unique

The most novel aspect of NerdQA is its hierarchical statement architecture. When generating intermediate or final reports, the LLM can cite not only original web sources but also intermediate statements that are built on top of other statements and sources. This creates citation trees that can:

- Unlock complex conclusions not explicitly stated in sources

- Support reasoning that builds safely on multiple pieces of evidence

- Be extended to generate novel solutions by producing both strict synthesis and hypotheses
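
The citation tree idea can be sketched as a small data structure: a statement cites a mix of source IDs and other statement IDs, and walking the tree recovers every original source behind a conclusion. The class and function names below (`Source`, `Statement`, `leaf_sources`) are assumptions made for this example, not NerdQA's actual types.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    id: str
    url: str


@dataclass
class Statement:
    id: str
    text: str
    # IDs of cited nodes: either original sources or other statements.
    cites: list[str] = field(default_factory=list)


def leaf_sources(node_id, statements, sources, _seen=None):
    """Return the set of original source IDs reachable from node_id."""
    seen = set() if _seen is None else _seen
    if node_id in seen:  # guard against cycles and repeated visits
        return set()
    seen.add(node_id)
    if node_id in sources:
        return {node_id}
    found = set()
    for cited in statements[node_id].cites:
        found |= leaf_sources(cited, statements, sources, seen)
    return found


sources = {
    "E1": Source("E1", "https://example.com/a"),
    "E2": Source("E2", "https://example.com/b"),
    "E3": Source("E3", "https://example.com/c"),
}
statements = {
    "S1": Statement("S1", "Claim drawn from two pages", ["E1", "E2"]),
    "S2": Statement("S2", "Conclusion built on S1 plus new evidence", ["S1", "E3"]),
}
evidence = leaf_sources("S2", statements, sources)
```

Here `S2` is not stated in any single source, yet it remains traceable to `E1`, `E2`, and `E3` through `S1`.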

Comprehensive tracing of the hierarchical research process through Langfuse integration

Technical Implementation

Tech Stack

- Core: Custom LLM framework for orchestration (no dependencies on LangChain, etc.)

- LLM: LiteLLM proxy for reliable API access with fallbacks and Redis caching

- Search: Exa Search for web search capabilities

- Reranking: Cohere Reranker for improved search result relevance

- Monitoring: Langfuse for comprehensive LLM tracing

- Frontend: Vanilla JavaScript for a lightweight, customizable UI

System Architecture

NerdQA consists of several key components:

- Pipeline: Orchestrates the research workflow, managing statement generation, query generation, and answer synthesis

- Statement Generator: Creates factual statements based on search results

- Query Generator: Formulates follow-up queries based on current research state

- Answer Generator: Synthesizes final answers with citations to statements and sources

- Web Server: Provides API endpoints for interacting with the system

- Frontend: Simple, customizable vanilla JavaScript interface

The system uses a loop-based approach. Each iteration:

- Generates queries based on the research question

- Searches for relevant information

- Creates statements from search results

- Builds on previous statements to generate deeper insights

After the final iteration, the system synthesizes a comprehensive answer.
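
The loop can be sketched roughly as follows. This is an illustrative outline under stated assumptions, not the project's implementation: `run_research` and the four callables are hypothetical stand-ins for the Query Generator, search backend, Statement Generator, and Answer Generator, and the queries run sequentially here for simplicity rather than in parallel.

```python
def run_research(question, generate_queries, search, make_statements,
                 synthesize, max_depth=2, max_breadth=2):
    """Run a fixed number of iterations, then synthesize an answer."""
    statements = []  # grows across iterations so later steps can build on it
    for _ in range(max_depth):
        # Generate follow-up queries from the question and current state.
        queries = generate_queries(question, statements)[:max_breadth]
        for query in queries:
            # Search for relevant information.
            results = search(query)
            # Create statements from results, building on earlier ones.
            statements.extend(make_statements(query, results, statements))
    # Synthesize the final answer once the loop is done.
    return synthesize(question, statements)


# Stub components so the sketch runs without any LLM or search API.
def fake_queries(question, statements):
    return [f"{question} #{len(statements) + i}" for i in range(3)]


def fake_search(query):
    return [f"result for {query}"]


def fake_statements(query, results, previous):
    return [f"S{len(previous) + 1}: {results[0]}"]


def fake_synthesize(question, statements):
    return f"{question}: based on {len(statements)} statements"


answer = run_research("why is the sky blue", fake_queries, fake_search,
                      fake_statements, fake_synthesize)
```

Passing the components in as plain callables keeps each step easy to test or swap in isolation.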

Advanced Features

- Citation Trees: Click on citations in the final report to explore the evidence chain

- Feedback: Provide feedback on answers to improve future research

- Custom Search Providers: The system supports multiple search backends including Exa Search, Brave Search, and OpenAlex

- LLM Tracing: With Langfuse integration, you can inspect all LLM calls, prompts, token usage, timing, and more

- Caching: Redis-based LLM response caching to improve performance and reduce API costs
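
To illustrate why response caching pairs well with a deterministic workflow, here is a minimal sketch of keying responses on the exact request. In NerdQA the caching is handled through the LiteLLM proxy and Redis; the `cache_key` function and `CachedLLM` class below are hypothetical, use a plain dict in place of Redis, and do not reflect how LiteLLM computes its keys.

```python
import hashlib
import json


def cache_key(model: str, messages: list[dict], temperature: float = 0.0) -> str:
    """Derive a stable key from the request; identical requests collide."""
    payload = json.dumps(
        {"model": model, "messages": messages, "temperature": temperature},
        sort_keys=True,
        separators=(",", ":"),
    )
    return "llm:" + hashlib.sha256(payload.encode("utf-8")).hexdigest()


class CachedLLM:
    """Wraps any LLM callable; `store` is a dict standing in for Redis."""

    def __init__(self, call_llm, store=None):
        self.call_llm = call_llm
        self.store = {} if store is None else store
        self.misses = 0  # counts real (uncached) LLM calls

    def complete(self, model, messages, temperature=0.0):
        key = cache_key(model, messages, temperature)
        if key not in self.store:
            self.misses += 1
            self.store[key] = self.call_llm(model, messages, temperature)
        return self.store[key]


llm = CachedLLM(lambda model, messages, temperature: "stub answer")
prompt = [{"role": "user", "content": "Summarize the search results"}]
first = llm.complete("small-model", prompt)
second = llm.complete("small-model", prompt)  # served from the cache
```

Since the workflow issues the same prompts for the same inputs, rerunning a research session mostly hits the cache instead of the API.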